DNA is not just a molecule, my dear testers; it is the blueprint of what something becomes. When I say all of you testers need to seed this “DNA” into every GenAI prompt, I mean it quite literally, because how you structure your test prompt shapes what it becomes, and what it delivers back to you and to your customers.

The False Promise of “Just Ask”

We were told GenAI would be as easy as typing in a sentence. “Generate test cases for the payment screen,” we asked. And GenAI responded with generic, shallow, and sometimes comically wrong suggestions. For many testers, this was their first hard lesson in prompt engineering: clarity is currency, and vague inputs lead to useless outputs (the good old Garbage-In, Garbage-Out principle, resurrected). In my experience designing over 500 real-world prompts across different testing contexts and domains, I have seen what works, what fails, and why prompting is now, more than ever, a DNA-level skill.

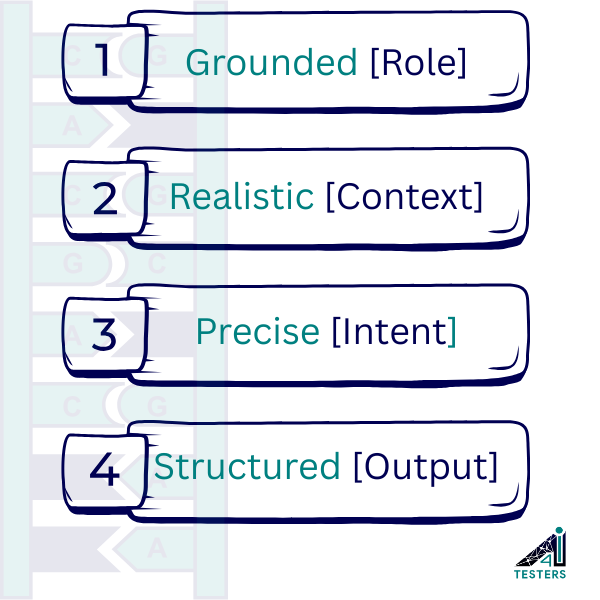

The DNA of a High-Quality Testing Prompt

Here is a quick snapshot showing how clarity changes everything:

Example 1 – Functional Testing Prompt (Poor vs. Improved)

| Prompt by the Testers | Outcome from the GenAI Tools |
|---|---|
| “Write test cases for a food delivery app”. | Vague and broad, leading to generic results. |
| Role: Functional Tester focused on order placement workflows. Context: A mobile-based food ordering application where users browse menus, customize items, and place orders for home delivery. Task: Generate test cases specifically for the cart and checkout flow. Guardrails: Focus on item quantity changes, pricing recalculation, and optional coupon application. Output Format: Numbered list with each test case containing preconditions, steps, and expected result. Prompt: “As a functional tester working on a food ordering application, generate 15 test cases for the cart and checkout workflow. Focus on scenarios involving item quantity changes, real-time price updates, and coupon application. Present the output as a numbered list including preconditions, steps to execute, and expected results.” | Targeted and ready to use in test documentation. |
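If you assemble prompts in a script rather than by hand, the five fields from Example 1 can be kept as separate inputs and rendered in a fixed order. Here is a minimal Python sketch, assuming a hypothetical `PromptSpec` class of my own naming (not from any library); it refuses to render a prompt with an empty field, so no part of the prompt DNA gets skipped silently.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the five prompt 'DNA' fields from Example 1."""
    role: str
    context: str
    task: str
    guardrails: str
    output_format: str

    def render(self) -> str:
        # Each field becomes one labelled line, always in the same order.
        sections = [
            ("Role", self.role),
            ("Context", self.context),
            ("Task", self.task),
            ("Guardrails", self.guardrails),
            ("Output Format", self.output_format),
        ]
        # Refuse to build a prompt with any empty field.
        missing = [label for label, value in sections if not value.strip()]
        if missing:
            raise ValueError("Prompt is missing: " + ", ".join(missing))
        return "\n".join(f"{label}: {value.strip()}" for label, value in sections)


spec = PromptSpec(
    role="Functional Tester focused on order placement workflows.",
    context=("A mobile-based food ordering application where users browse menus, "
             "customize items, and place orders for home delivery."),
    task="Generate 15 test cases for the cart and checkout workflow.",
    guardrails=("Focus on item quantity changes, pricing recalculation, "
                "and optional coupon application."),
    output_format=("Numbered list; each test case has preconditions, "
                   "steps, and expected result."),
)
print(spec.render())
```

Keeping the fields apart like this also makes it easy to swap only the Context or Guardrails when you reuse the same role on another feature.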

Example 2 – Security Testing Prompt with Few-Shot Examples (again, Poor vs. Improved)

| Prompt by the Testers | Outcome from the GenAI Tools |
|---|---|
| “Write security test cases for login screen on our internal portal”. | Vague, no depth beyond kindergarten thinking. |
| Role: Security Tester with awareness of internal threats and misconfigurations. Prompt: | Contextual, detailed, and consistent with expected testing depth, emulating hackers. |

Needless to say, good testing prompts are your babies:

- You must define the tester’s role with enough specificity so the GenAI understands the testing lens you want it to adopt.

- You should always include the system context: what type of app it is, who uses it, and what the feature does. And yes, repeating things for clarity is perfectly fine (and has a special advantage in GenAI).

- You need to name the “attribute” of Quality to focus on, such as functional, security, usability, or performance, so that the prompt stays grounded.

- Tell the model what format you want the output to be in, whether it should be a markdown table, plain list, or structured JSON.

- And whenever possible, include a short example. That one sample often works better than a paragraph of instructions.
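The last point, adding a short example, is easy to make repeatable. A minimal sketch, assuming a hypothetical `build_few_shot_prompt` helper and an invented sample test case: the example sits between the instruction and the actual request, so the model sees the format before it is asked to produce anything.

```python
from typing import Sequence


def build_few_shot_prompt(instruction: str, examples: Sequence[str], request: str) -> str:
    """Hypothetical helper: place worked examples between the instruction and the request."""
    parts = [instruction.strip(), ""]
    for number, example in enumerate(examples, start=1):
        parts.append(f"Example {number}:")
        parts.append(example.strip())
        parts.append("")  # blank line keeps examples visually separate
    parts.append(request.strip())
    return "\n".join(parts)


# An invented sample test case, used only to show the model the expected shape.
sample_case = """\
TC-01 | Precondition: cart has 1 item priced at 200
Steps: increase the quantity to 3
Expected: cart total updates to 600"""

prompt = build_few_shot_prompt(
    instruction="You are a functional tester. Follow the format of the example exactly.",
    examples=[sample_case],
    request="Now write 5 more test cases for coupon application in the same format.",
)
print(prompt)
```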

Patterns That Worked for Me and My Students

- I found that role-based prompts always anchored the output with better relevance and coverage.

- Including one or two example test cases made a visible difference in structure and tone, especially for beginners.

- Structured outputs like tables or numbered lists help keep responses usable in test plans or reports (and CSVs that data-drive our test automation scripts).

- I recommend splitting large testing tasks into smaller prompts: start with base cases, then expand to edge or negative ones. Don’t ask for everything in one shot.
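On structured outputs feeding automation: if the prompt asks for JSON with fixed keys, a few lines of Python can check the response and turn it into a CSV that a data-driven script can read. This is a sketch only; the key names, the sample response, and `cases_to_csv` are hypothetical.

```python
import csv
import io
import json

# Hypothetical model response, assuming the prompt asked for a JSON array
# of test cases using exactly these keys.
model_output = """
[
  {"id": "TC-01", "quantity": 1, "coupon": "", "expected_total": 200},
  {"id": "TC-02", "quantity": 3, "coupon": "SAVE10", "expected_total": 540}
]
"""

FIELDS = ["id", "quantity", "coupon", "expected_total"]


def cases_to_csv(raw: str) -> str:
    """Parse the JSON response, check each case has the agreed keys, return CSV text."""
    cases = json.loads(raw)
    for case in cases:
        missing = set(FIELDS) - set(case)
        if missing:
            raise ValueError(f"{case.get('id', '?')} is missing {sorted(missing)}")
    buffer = io.StringIO()
    # DictWriter raises ValueError if a case carries keys outside FIELDS.
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(cases)
    return buffer.getvalue()


print(cases_to_csv(model_output))
```

Validating before writing means a malformed response fails loudly here instead of quietly breaking a test run later.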

Patterns That Failed for All of Us

- Prompts that skipped the tester’s role or the testing phase usually gave generic, almost laughable responses.

- Trying to ask for test cases, test data, and bugs all at once made the model behave unpredictably. It is too much cognitive load, much like how we feel when multitasking under pressure.

- Using placeholders like <feature> without giving the model any clue about what they represent confused the context entirely.

- Omitting the test phase (e.g., UAT vs. Performance) often led to functional tests when you needed something deeper.
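Several of these failures can be caught before a prompt is ever sent. Below is a minimal sketch of a prompt “linter” in Python; the keyword lists and the `lint_prompt` name are hypothetical, meant to be tuned to your own team's vocabulary, and the checks are rough heuristics rather than any standard.

```python
import re
from typing import List

# Illustrative keyword lists (assumptions, not a standard): tune them to your team.
TEST_FOCUS = ("functional", "security", "usability", "performance",
              "unit", "integration", "system", "uat", "regression")
DELIVERABLES = ("test cases", "test data", "bug report")
PLACEHOLDER = re.compile(r"<([^<>]+)>")


def lint_prompt(prompt: str) -> List[str]:
    """Return warnings for the failure patterns above; an empty list means none were found."""
    text = prompt.lower()
    warnings = []
    if "role:" not in text and not text.startswith("as a"):
        warnings.append("No tester role defined.")
    if not any(re.search(rf"\b{word}\b", text) for word in TEST_FOCUS):
        warnings.append("No test phase or quality attribute named.")
    for name in PLACEHOLDER.findall(prompt):
        warnings.append(f"Unresolved placeholder <{name}>: add a sample value or description.")
    if sum(item in text for item in DELIVERABLES) > 1:
        warnings.append("Several deliverables in one prompt: split it into a chain.")
    return warnings


for warning in lint_prompt("Write test cases, test data, and bug reports for <feature>."):
    print(warning)
```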

My Sincere Prompt Engineering Tips for Testers

- Speak to the AI just as you would brief a junior tester: role, scope, focus, and format all matter.

- Placeholders are powerful, but only when paired with sample values or clear descriptions of intent.

- Define the output style upfront and do not leave it to chance or default settings. As I said, repeat things.

- Don’t mix multiple tasks into one prompt. Be surgical. You can always chain prompts intelligently later. Right?

- Think of prompt crafting as iterative. No great test prompt is born on the first try. It evolves, just like test cases or the change requests (CRs) of your application under test (AUT) itself.
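Chaining, in its simplest form, means each focused prompt receives the previous answer as context. A minimal sketch, assuming `ask` is whatever function your team uses to call an LLM (hypothetical here); `fake_ask` is an offline stand-in so the chain runs without any API. The three stages mirror the base, edge, and negative split suggested earlier.

```python
from typing import Callable, List

# `ask` stands for any function that sends a prompt to an LLM and returns its text.
Ask = Callable[[str], str]


def run_chain(ask: Ask, feature: str) -> List[str]:
    """Run one focused prompt per stage, feeding each stage the previous stage's output."""
    stages = [
        "List the base (happy-path) test cases for {feature}.",
        "Given these base cases:\n{previous}\nAdd edge cases only. Do not repeat the base cases.",
        "Given these cases:\n{previous}\nAdd negative cases only. Do not repeat earlier cases.",
    ]
    outputs: List[str] = []
    previous = ""
    for template in stages:
        # str.format ignores keyword arguments a template does not use.
        prompt = template.format(feature=feature, previous=previous)
        previous = ask(prompt)
        outputs.append(previous)
    return outputs


def fake_ask(prompt: str) -> str:
    # Offline stand-in for a real model call: echoes the prompt's first line.
    return "ANSWER TO: " + prompt.splitlines()[0]


for step in run_chain(fake_ask, "the cart and checkout flow"):
    print(step)
```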

Tooling – Where to Practice and Scale

- I use tools like ChatGPT, Claude, and Perplexity to experiment freely and compare responses across LLMs. I also have my own custom-trained LLM testbed (which you will all get to befriend in a few weeks).

- Prompt evaluation frameworks like Promptfoo and Ragas help me measure the precision and quality of test outputs.

- I also rely on Python-based prompt runners that plug into our automation flows, making GenAI test-ready.

- And yes, I reuse and version all my good prompts, thanks to prompt libraries like the one we are building at my Ai4Testers™ Knowledge Platform.
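Versioning prompts does not need special tooling to get started. A minimal in-memory sketch, with a hypothetical `PromptLibrary` class (not the Ai4Testers™ library or any product API): each save gets a version number and a short SHA-256 fingerprint, and saving unchanged text does not create a new version.

```python
import hashlib
from typing import Dict, List, Tuple


class PromptLibrary:
    """Hypothetical in-memory prompt library with simple versioning."""

    def __init__(self) -> None:
        # name -> list of (prompt text, short SHA-256 fingerprint), oldest first
        self._versions: Dict[str, List[Tuple[str, str]]] = {}

    def save(self, name: str, text: str) -> Tuple[int, str]:
        """Store text as the next version of `name`; return (version number, fingerprint)."""
        history = self._versions.setdefault(name, [])
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        if history and history[-1][1] == digest:
            return len(history), digest  # unchanged text: no new version
        history.append((text, digest))
        return len(history), digest

    def get(self, name: str, version: int = 0) -> str:
        """Return a 1-based version of `name`, or the latest one when version is 0."""
        history = self._versions[name]
        return history[-1][0] if version == 0 else history[version - 1][0]


library = PromptLibrary()
library.save("checkout-functional", "Role: Functional Tester. Task: cart and checkout cases.")
library.save("checkout-functional", "Role: Functional Tester. Task: cart, checkout, and coupon cases.")
print(library.get("checkout-functional", version=1))
print(library.get("checkout-functional"))
```

One option for a team setting is to keep the same idea in plain files under version control, so prompt changes get reviewed the way code changes are.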

Please Treat Prompts as Our Better Halves

After a flurry of workshops, whiteboard debates, long chai conversations, my exchanges with ELIZA (the first chatbot ever), and 30 years of overall AI experience, here is what I now truly believe:

Prompts are not just digital commands. They are our better halves, more so in this new GenAI journey. They listen, learn, adapt, and if crafted with care, they become smarter than we imagined.

I saw testers stare at the screen as if praying for magic to happen, trying to type something that “just works”, as if the model could read their minds. It reminded me of how we sometimes explain things to family members: half in words, half in hope, expecting full understanding. That never works. Neither does a half-baked prompt.

Someone asked me,

“Ashwin, can one prompt really travel across tools, teams, and test phases?”

Yes, if it carries context, clarity, and character, it will hold its ground anywhere.

Another question I keep hearing,

“Should we really treat prompts like code?”

My answer: if you cannot trust a prompt enough to reuse it next week, it was never ready today. So yes, good prompts are very much like good code.

Prompts carry your testing DNA, my dear testers: your way of thinking, your lens of Quality. And like any strong partnership, they need nurturing. Reuse them. Refine them. Respect them.

So tell me…

What is the one prompt you are secretly proud of?

And the one that made you laugh out loud with its weird response?

Don’t want to tell me and act smart? OK. At least answer yourself. 🙂

That is where it starts. With honesty, humor, and a healthy dose of curiosity. Is it not our DNA?

Let us not just write prompts. Let us raise them. It is our DNA, no?

– Ashwin Palaparthi

#FutureReadyQE #PromptCraft #Ai4Testers